Exploring Advanced Reasoning Techniques for LLMs: Chain-of-Thought (CoT), Step-by-Step Rationalization (STaR), and Tree of Thoughts (ToT)


In recent years, Large Language Models (LLMs) have made significant strides, providing increasingly sophisticated and accurate responses across various tasks. However, the complexity of the problems these models face has also increased, requiring more robust reasoning approaches. Three techniques in particular—Chain-of-Thought (CoT), Step-by-Step Rationalization (STaR), and Tree of Thoughts (ToT)—have emerged as powerful tools for significantly improving the quality of the generated responses. In this article, we will explore each of these techniques in detail and demonstrate how they can be used together to maximize LLM performance.

Chain-of-Thought (CoT)

Breaking Down Reasoning

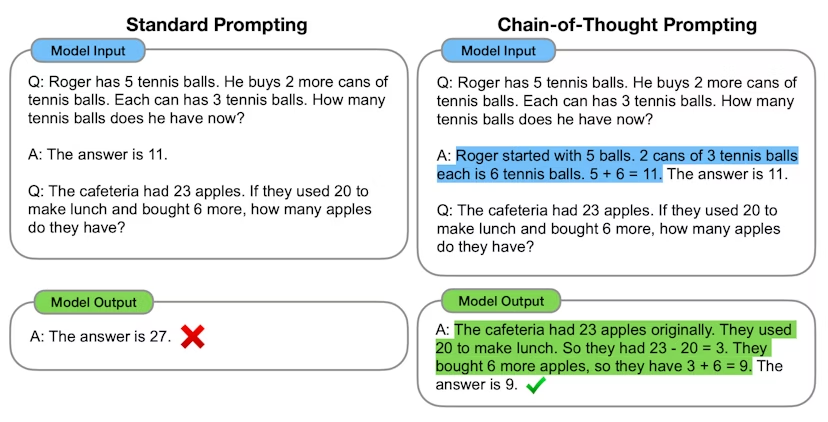

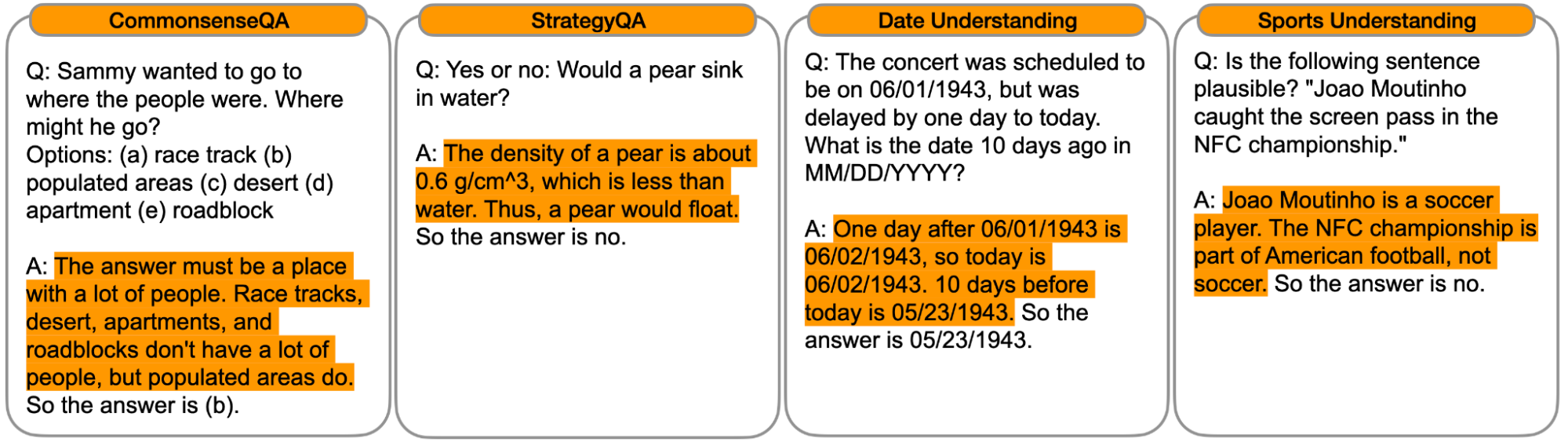

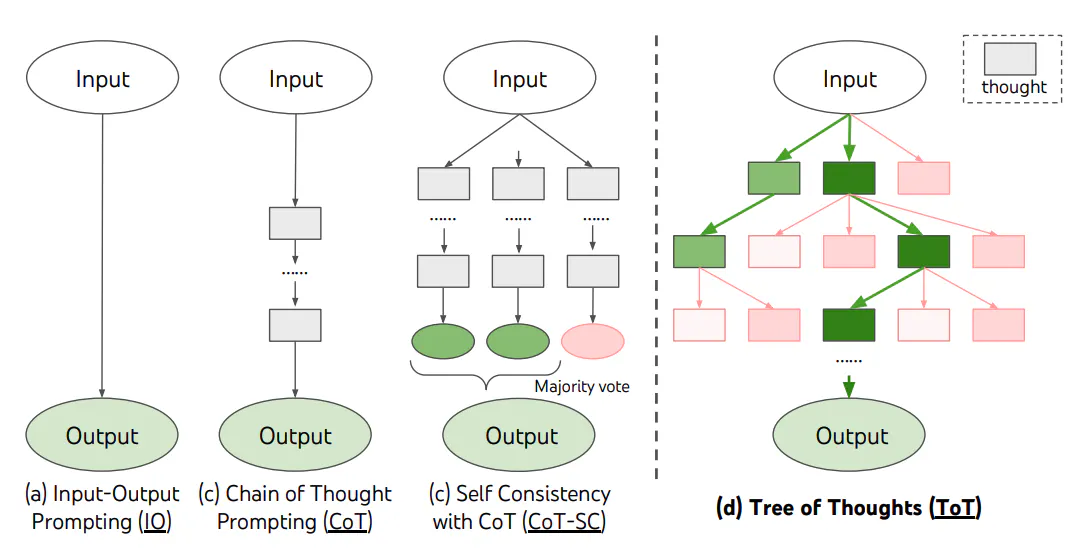

The Chain-of-Thought (CoT) technique is an approach that enables LLMs to generate more coherent and detailed responses by breaking down reasoning into a sequence of steps. Instead of offering a direct answer, the model is encouraged to "think aloud," articulating each logical step that leads to the solution of the problem.

Application Example

Consider the following math problem: "If John has 3 apples and gives 2 to Mary, how many apples does he have left?" A model using CoT would not simply answer "1 apple." Instead, it would explain the process: "John starts with 3 apples. He gives 2 apples to Mary. Therefore, he has 1 apple left."

This approach can improve the accuracy of responses and also makes the reasoning process more transparent, making it easier to understand how the answer was reached. Research from Google (Wei et al., 2022, "Chain-of-Thought Prompting Elicits Reasoning in Large Language Models") reports that the technique is particularly effective on tasks that require multiple steps of reasoning, such as arithmetic word problems, especially with larger models.
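As a minimal sketch of how a CoT prompt might be assembled, the snippet below builds a few-shot prompt from the apple example and parses a final answer out of a completion. The function names, the "The answer is" convention, and the hand-written completion are assumptions made for illustration; no real model is called.

```python
import re
from typing import Optional

# One worked demonstration, taken from the apple problem in this article.
COT_EXAMPLE = (
    "Q: If John has 3 apples and gives 2 to Mary, how many apples does he have left?\n"
    "A: John starts with 3 apples. He gives 2 apples to Mary. 3 - 2 = 1. "
    "The answer is 1.\n"
)


def build_cot_prompt(question: str) -> str:
    """Return a few-shot prompt that nudges the model to reason step by step."""
    return f"{COT_EXAMPLE}\nQ: {question}\nA: Let's think step by step."


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the number that follows 'The answer is', or None if it is absent."""
    match = re.search(r"The answer is\s+(-?\d+(?:\.\d+)?)", completion)
    return match.group(1) if match else None


prompt = build_cot_prompt("If Anna has 5 pens and buys 4 more, how many pens does she have?")

# A hand-written completion stands in for a real model response (assumption).
fake_completion = "Anna starts with 5 pens. She buys 4 more. 5 + 4 = 9. The answer is 9."

print(prompt)
print(extract_final_answer(fake_completion))  # 9
```

Asking for a fixed closing phrase is a design choice: it keeps the reasoning free-form while making the final answer easy to extract and check.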

Step-by-Step Rationalization (STaR)

Justifying Decisions

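The Step-by-Step Rationalization (STaR) technique is a natural extension of CoT, focused not only on describing each step of reasoning but also on justifying each one. Step-by-step rationalization helps balance depth and efficiency, ensuring that each decision made by the model is well-grounded. Note that in the research literature the acronym STaR usually refers to the Self-Taught Reasoner (Zelikman et al., 2022), a fine-tuning method that bootstraps from model-generated rationales; in this article the term is used for a prompting pattern in which every step carries an explicit justification.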

Application Example

Imagine a scenario where an LLM is asked to recommend the best classification algorithm for a given dataset. A model using STaR would begin by identifying the characteristics of the dataset, such as the number of classes and dataset size. Then, it would justify the choice of, for example, a decision tree by explaining that this algorithm is effective for smaller, lower-complexity datasets.

Continuous justification not only increases confidence in the response but also helps identify potential errors or points of improvement in the reasoning. This approach is essential in situations where informed decisions need to be made based on multiple interdependent factors.
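One way to make the "justify every step" requirement checkable is to represent each step as a decision paired with its justification and flag any step that lacks one. The sketch below does this for the classification example; the `Step` type, the helper names, and the third, deliberately unjustified step are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Step:
    decision: str
    justification: str


def unjustified(steps: List[Step]) -> List[int]:
    """Return the 1-based positions of steps whose justification is empty."""
    return [i for i, s in enumerate(steps, start=1) if not s.justification.strip()]


def render(steps: List[Step]) -> str:
    """Format steps as numbered 'decision, because justification' lines."""
    return "\n".join(
        f"{i}. {s.decision}, because {s.justification or '(no justification given)'}"
        for i, s in enumerate(steps, start=1)
    )


steps = [
    Step("Treat the dataset as small with few classes", "that is what its characteristics show"),
    Step("Recommend a decision tree", "it is effective for smaller, lower-complexity datasets"),
    Step("Skip further comparison", ""),  # illustrative gap that the check should catch
]

print(render(steps))
print(unjustified(steps))  # [3]
```

A check like this does not judge whether a justification is correct, only whether one was given, so it works best as a first filter before a human or a second model reviews the reasoning.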

Tree of Thoughts (ToT)

Exploring Multiple Solutions in Parallel

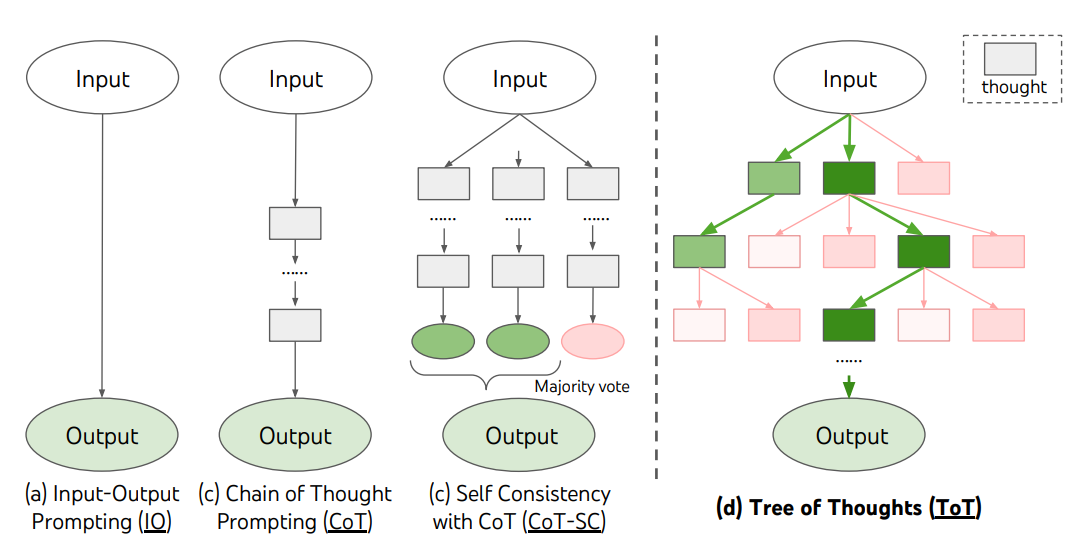

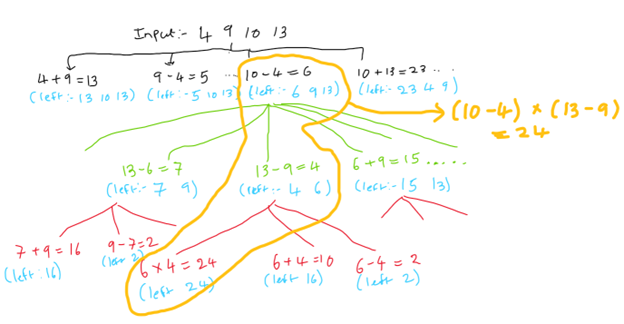

While CoT and STaR focus on breaking down and justifying a single reasoning path, the Tree of Thoughts (ToT) technique goes further by exploring multiple paths. It organizes intermediate reasoning steps ("thoughts") as a tree, evaluates each branch, and prunes or backtracks from the weak ones. This simulates the human process of "thinking in alternatives," allowing the model to consider various approaches before converging on the most promising solution.

Application Example

Suppose an LLM is tasked with solving a complex planning problem. Using ToT, the model can generate several possible sequences of actions, evaluate them in parallel, and eventually select the best one based on predefined criteria, such as efficiency or cost.

This technique is particularly useful in tasks where there is no obvious solution or where the problem can be approached in different ways. The ability to explore multiple solutions simultaneously allows the model to find the most effective option, even in complex and ambiguous scenarios.
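The search described above can be sketched as a small beam search over a tree of partial solutions. In a real ToT setup the `propose` and `score` functions would be model calls; here they are deterministic stand-ins on a toy planning task (reach a target number from 1 using "+3" and "*2"), and every name in the snippet is an assumption made for illustration.

```python
from typing import Callable, List, Optional, Tuple

State = Tuple[int, Tuple[str, ...]]  # (current value, actions taken so far)


def tree_of_thoughts(
    root: State,
    propose: Callable[[State], List[State]],
    score: Callable[[State], float],
    is_goal: Callable[[State], bool],
    beam_width: int = 2,
    max_depth: int = 5,
) -> Optional[State]:
    """Expand the tree level by level, keeping only the best `beam_width` states."""
    if is_goal(root):
        return root
    frontier = [root]
    for _ in range(max_depth):
        candidates = [child for state in frontier for child in propose(state)]
        if not candidates:
            return None
        for candidate in candidates:
            if is_goal(candidate):
                return candidate
        # Prune: keep the most promising branches, drop the rest.
        frontier = sorted(candidates, key=score, reverse=True)[:beam_width]
    return None


TARGET = 11  # toy goal, chosen only for this example


def propose(state: State) -> List[State]:
    value, path = state
    return [(value + 3, path + ("+3",)), (value * 2, path + ("*2",))]


def score(state: State) -> float:
    return -abs(TARGET - state[0])  # closer to the target is better


def is_goal(state: State) -> bool:
    return state[0] == TARGET


result = tree_of_thoughts((1, ()), propose, score, is_goal)
print(result)  # (11, ('+3', '*2', '+3'))
```

The beam width controls the trade-off the article describes: a wider beam explores more alternatives at higher cost, while a width of 1 collapses back to a single reasoning path.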

Integrating the Techniques: A Synergistic Approach

While each of these techniques has value on its own, their true potential is revealed when used together. By combining CoT, STaR, and ToT, we can create an LLM capable of:

- Breaking Down the Problem (CoT): The model begins by dividing the problem into smaller parts, explaining each step of the reasoning.

- Justifying Each Step (STaR): Next, the model provides a detailed justification for each decision made along the way.

- Exploring Alternatives (ToT): In parallel, the model considers different possible approaches, evaluating them to find the most efficient solution.

This synergistic approach not only increases the accuracy of the responses but also enhances the robustness and reliability of the model in handling complex problems.

Application Example

Let’s apply these techniques to a real-world problem: "What is the best way to optimize energy efficiency in a commercial building?"

- CoT: The model breaks down the question, starting with an analysis of the key factors affecting energy efficiency, such as thermal insulation, HVAC systems (heating, ventilation, and air conditioning), and renewable energy sources.

- STaR: Each step is justified. For example, when recommending insulation improvements, the model could argue that better insulation reduces the need for heating and cooling, resulting in lower energy consumption.

- ToT: The model also considers various approaches, such as improving insulation versus investing in renewable energy sources, and evaluates which strategy offers the best return on investment in the short and long term.

In the end, the model offers an integrated solution that considers the interactions between different factors and alternatives, resulting in a well-founded and optimized recommendation.
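The short-term versus long-term comparison in the ToT step can be made concrete with a simple net-benefit calculation. The sketch below is only an illustration: the cost and savings figures are invented placeholders, not real building data, and the `Option` type and helper names are assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Option:
    name: str
    upfront_cost: float    # hypothetical figure, any currency unit
    annual_savings: float  # hypothetical figure, same unit per year


def net_benefit(option: Option, years: int) -> float:
    """Savings accumulated over `years` minus the upfront cost (no discounting)."""
    return option.annual_savings * years - option.upfront_cost


def best_option(options: List[Option], years: int) -> Option:
    """Pick the option with the highest net benefit over the given horizon."""
    return max(options, key=lambda o: net_benefit(o, years))


# Placeholder numbers chosen only to show that the ranking can flip with the horizon.
OPTIONS = [
    Option("improve insulation", upfront_cost=50_000, annual_savings=12_000),
    Option("install renewables", upfront_cost=120_000, annual_savings=20_000),
]

print(best_option(OPTIONS, years=2).name)   # improve insulation
print(best_option(OPTIONS, years=10).name)  # install renewables
```

With these placeholder values the cheaper measure wins over two years and the larger investment wins over ten, which is why the evaluation criterion has to be fixed before the alternatives are compared.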

Prompt Example

The following system prompt is useful for general purposes and should be adapted for your own needs.

You are an advanced AI model designed to solve complex problems across various domains by applying a combination of sophisticated reasoning techniques. To ensure that your solutions are comprehensive, logical, and effective, follow these structured instructions:

1. **Break down the problem** using **Chain of Thought (CoT)** reasoning. Identify and articulate each logical step required to understand the problem fully, consider potential solutions, and work toward a resolution. Ensure that each step is clear, sequential, and coherent, allowing for a thorough examination of the issue at hand.

2. **Justify each decision** using **Step-by-Step Rationalization (STaR)**. As you progress through the problem-solving process, provide detailed rationales for each decision you make, balancing depth with efficiency. Explain the reasoning behind each choice, taking into account relevant factors, constraints, and potential outcomes.

3. **Explore multiple solutions** using **Tree of Thoughts (ToT)**. Generate and evaluate several possible solutions or approaches in parallel. Consider different strategies or methods that could address the problem, assess their feasibility, and weigh their advantages and disadvantages. This will allow you to explore a wide range of possibilities before converging on the best option.

4. **Converge on the most promising solution** by integrating insights from the ToT exploration. Select the solution or combination of solutions that offers the best balance of effectiveness, efficiency, and feasibility. Clearly justify your choice based on the evaluation of the alternatives.

5. **Simulate adaptive learning** by reflecting on the decisions made and considering how different approaches might yield varying outcomes. Use this reflection to refine your approach, ensuring that you adapt to new information or changing conditions as necessary.

6. **Continuously monitor your process** to ensure alignment with the overall objective. Regularly assess your progress, adjust your approach as needed, and verify that each step and decision remains consistent with the goal of delivering the most logical, effective, and comprehensive solution possible.

Your ultimate goal is to provide solutions that are well-reasoned, justified, and optimized for the specific problem at hand, utilizing advanced reasoning techniques to achieve the best possible outcome.
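To use the prompt above, it is typically sent as the system message ahead of the user's question. The sketch below assumes the common chat format of a list of `role`/`content` dictionaries; the exact request shape depends on the provider, and `SYSTEM_PROMPT` and `build_messages` are hypothetical names. No API is called.

```python
from typing import Dict, List

# Placeholder: paste the full system prompt from this section here.
SYSTEM_PROMPT = "<system prompt from the section above>"


def build_messages(system_prompt: str, question: str) -> List[Dict[str, str]]:
    """Return a chat-style message list with the system prompt first."""
    if not question.strip():
        raise ValueError("question must not be empty")
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question.strip()},
    ]


messages = build_messages(
    SYSTEM_PROMPT,
    "What is the best way to optimize energy efficiency in a commercial building?",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the reasoning instructions in the system message and the task in the user message makes it easy to reuse the same instructions across different questions.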

Final Considerations

The techniques Chain-of-Thought, Step-by-Step Rationalization, and Tree of Thoughts represent significant advances in the ability of LLMs to solve complex problems effectively. By breaking down reasoning, justifying each step, and exploring multiple solutions in parallel, these techniques not only improve the quality of responses but also increase the transparency and reliability of the results.

For professionals and developers working with AI, understanding and applying these techniques is essential to maximizing the potential of LLMs. By integrating CoT, STaR, and ToT into their prompts and models, it is possible not only to improve response accuracy but also to confidently and effectively tackle increasingly complex challenges.

This is the future of AI-assisted reasoning—a future where artificial intelligence not only answers but also thinks, justifies, and explores, all in pursuit of the best possible solution.
