How Artificial Intelligence Is Changing the Game: Deepfakes, ComfyUI, and the New Challenges of Digital Security

In this article, I’ll share a personal experience using these tools and how AI is transforming the world of images, along with detailing the process I followed to train models and test their capabilities.

The advancement of AI technologies in image generation has reached such a surprising level that what once seemed like science fiction has become a fascinating and terrifying reality. Tools like Stable Diffusion and Flux, combined with platforms such as Civit.ai and ComfyUI, are redefining what it means to create and manipulate images. This evolution impacts everything from entertainment to more serious areas like digital security, raising ethical questions about authenticity and trust.

Video telling this story and showing how to proceed with the model training

Playing with AI: Photos That Fool Even Your Family

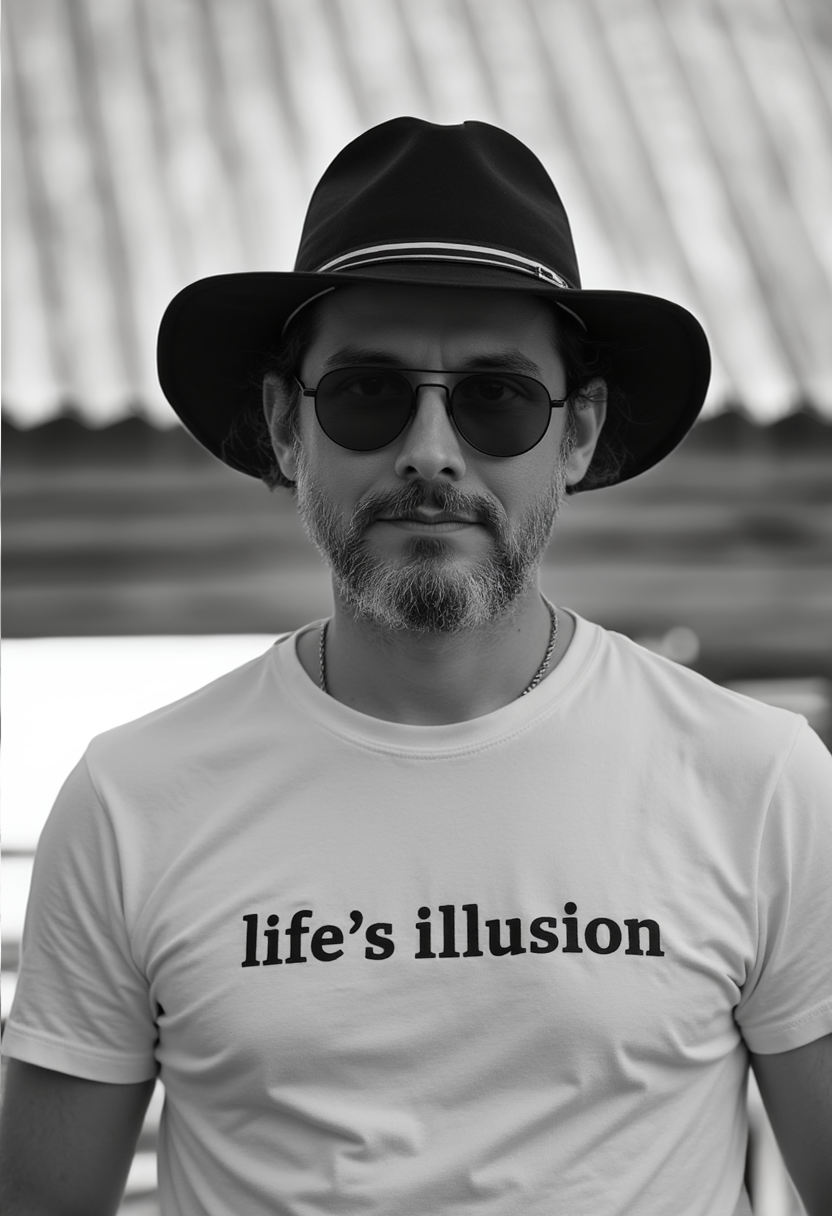

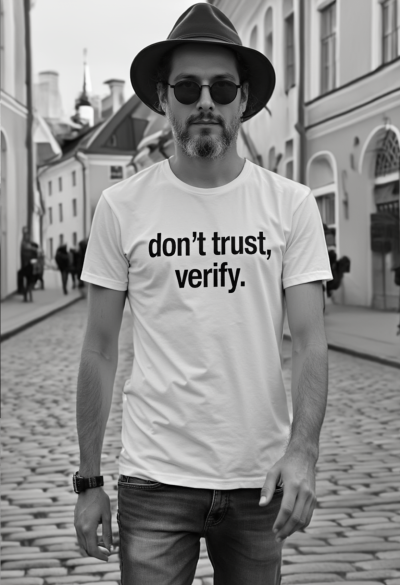

The idea of creating hyper-realistic images with AI led me to test a photo generation model. With the help of Civit.ai and ComfyUI, I trained a model on 21 personal photos from my phone, each resized to 512x512 pixels with the background removed. The training took about 44 minutes and cost less than $5. Afterward, I could generate images that looked extremely real, which opened up a range of possibilities for testing the limits of this technology.

As a fun experiment, I created a series of “my” photos in unusual situations. One of the funniest examples was an image of me playing with a bear in Estonia. The photo quality was so good that my wife had an idea: would the family believe it was real?

I decided to send the images to my family’s WhatsApp group. How would they react to “professional” photos of me, especially the one where I was playing with a bear? To my surprise, everyone believed it! My mom, my siblings, and other close relatives all fell for the prank, commenting on how incredible the photo looked and complimenting its style. My mom, for example, was genuinely impressed and thought I had done a studio photo session. After the buzz, I explained what had happened and used it to point out how scams will evolve from here on.

This reaction was a practical demonstration of how convincing AI-generated images can be. If my own family, who know my style and the kinds of things I usually do, believed the images were authentic, imagine what could be done in more serious contexts, like online scams and fraud.

Pictures sent to the family group

The Process: Training a Model with Flux

The core of this experiment was model training. For this, I used Flux, one of the most advanced image generation models available on Civit.ai. With a checkpoint size of about 22 gigabytes, Flux creates high-quality images, especially of faces and human bodies, and in my experience it outperforms even popular models like Stable Diffusion.

The process began with selecting 21 photos of myself, taken from different angles and in different situations, all shot on my phone. These images were cropped to 512x512 pixels, with the background removed, and tagged so that the model could learn as many details as possible about my face, body, and features. On Civit.ai, you can upload these images and use a feature called “auto-tagging,” which automatically adds basic descriptions to each image, like “beard,” “glasses,” “smiling,” and so on.
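To make the 512x512 preparation step concrete, here is a minimal sketch of the geometry involved. It assumes a centered square crop followed by a uniform resize, which is only one reasonable way to get square images; the function names are my own for illustration, and background removal is not covered. The resulting crop box could be passed to whatever image library you use.

```python
from typing import Dict, Tuple

TARGET_SIZE = 512  # side length, in pixels, used for the training photos


def center_square_box(width: int, height: int) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of the largest centered square."""
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)


def plan_resize(width: int, height: int, target: int = TARGET_SIZE) -> Dict[str, object]:
    """Describe the crop and scale needed to reach a target x target image."""
    box = center_square_box(width, height)
    side = box[2] - box[0]
    return {
        "crop_box": box,                  # region to keep from the original
        "scale": target / side,           # factor applied after cropping
        "output_size": (target, target),  # final dimensions
    }
```

For a 4000x3000 landscape phone photo, `plan_resize(4000, 3000)` keeps the central 3000x3000 region and scales it down to 512x512.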

After preparing the dataset, I started the training, and in about 44 minutes the model was ready. From there, it was just a matter of running inference, i.e., generating images with the trained model.
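I ran generation through the ComfyUI interface, but ComfyUI also accepts workflows over a local HTTP endpoint, so this step can be scripted. The sketch below only builds the request and sends nothing. It assumes a ComfyUI server at the default local address and a workflow exported in API format; the function name and the file name in the usage comment are placeholders of mine, not part of the workflow linked at the end of this article.

```python
import json
import urllib.request

# Assumption: ComfyUI is running locally on its default port.
COMFYUI_URL = "http://127.0.0.1:8188"


def build_queue_request(workflow: dict, base_url: str = COMFYUI_URL) -> urllib.request.Request:
    """Build (but do not send) a POST request that queues a workflow."""
    # The server expects the API-format workflow under the "prompt" key.
    payload = json.dumps({"prompt": workflow}).encode("utf-8")
    return urllib.request.Request(
        base_url + "/prompt",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Usage (requires a running server; "workflow_api.json" is a hypothetical file):
#   with open("workflow_api.json", encoding="utf-8") as f:
#       workflow = json.load(f)
#   urllib.request.urlopen(build_queue_request(workflow))
```

Keeping the request construction separate from the network call makes it easy to inspect the payload before anything is sent.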

The Reality We Are Living In

As funny as this experience was, it raises serious questions about the use of AI for image manipulation. The ease with which an ordinary person, like me, can create hyper-realistic images means we are entering an era where trusting what we see will become increasingly difficult.

Fake, precisely manipulated images can be used for various malicious purposes, such as blackmail, fraud, or even ruining marriages and reputations. In my example, my family fell for an innocent prank, but imagine the consequences if this technology were used maliciously.

The Importance of Authentication and Protection

From this experience, it’s clear that we need to protect ourselves from scams that use AI-generated images. One of the most effective methods I recommend is creating a family password. If you receive a compromising message or photo that seems to involve a family member, a password (a word, phrase, or answer to a question) that only family members know can be an essential step in verifying that the communication is authentic.

In practice, when a criminal tries to use a cloned image or voice to deceive someone, you can quickly validate during the conversation whether you’re really speaking with your family member or a fraudster. If the person on the other end doesn’t know the password, it’s a sign something is wrong.

You don’t even need to ask for the password outright; just agree beforehand on the answer to a simple, ordinary-sounding question. This way, you can authenticate your family member without raising any suspicion.
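The family password is a human procedure, but the same idea can be written as a small challenge-response check, which shows what "knowing the answer" means in practice. This is a minimal sketch under my own assumptions: the function names and the example answer are made up, answers are compared after ignoring case and extra spaces, and the comparison is done on hashes in constant time.

```python
import hashlib
import hmac
import unicodedata


def normalize(answer: str) -> str:
    """Ignore case, accents written in different forms, and extra spaces."""
    text = unicodedata.normalize("NFKC", answer)
    return " ".join(text.casefold().split())


def answers_match(expected: str, given: str) -> bool:
    """Return True when the given answer matches the agreed one."""
    expected_digest = hashlib.sha256(normalize(expected).encode("utf-8")).digest()
    given_digest = hashlib.sha256(normalize(given).encode("utf-8")).digest()
    # Constant-time comparison avoids leaking how close a guess was.
    return hmac.compare_digest(expected_digest, given_digest)


# Hypothetical agreed answer to a question like
# "What was the name of grandma's first dog?"
AGREED_ANSWER = "Blue Bicycle"
```

With this sketch, `answers_match(AGREED_ANSWER, "  blue   bicycle ")` is accepted, while any other answer is rejected, which mirrors the rule above: if the person doesn’t know the answer, something is wrong.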

Conclusion: The Future of AI and the Reality Ahead

What once seemed like science fiction is happening now. AI technology for image generation has reached a point where we can no longer blindly trust what we see or hear. While some people use these tools for fun and artistic creation, others will use them for crime. Therefore, it’s crucial to be prepared and informed about what’s happening.

If even my mom and siblings believed the photo with the bear was real, imagine the potential damage in situations where there’s no playful context. We are moving toward a future where reality and fiction blend in ways we never imagined.

The best way to deal with this? Information, dialogue with family and friends, and authentication methods. It has never been more important to verify what we see before acting on it.

Links:

ComfyUI: https://github.com/comfyanonymous/ComfyUI

ComfyUI workflow: https://civitai.com/models/618997?modelVersionId=702900

Civit.ai: https://civitai.com/

Flux.1-dev checkpoint: https://civitai.com/models/618692/flux